what are the characteristics of a good experimental design

The design of experiments (DOE, DOX, or experimental design) is the design of any task that aims to describe and explain the variation of information under conditions that are hypothesized to reflect the variation. The term is generally associated with experiments in which the design introduces conditions that directly affect the variation, but may also refer to the design of quasi-experiments, in which natural conditions that influence the variation are selected for observation.

In its simplest form, an experiment aims at predicting the outcome by introducing a change of the preconditions, which is represented by one or more independent variables, also referred to as "input variables" or "predictor variables." The change in one or more independent variables is generally hypothesized to result in a change in one or more dependent variables, also referred to as "output variables" or "response variables." The experimental design may also identify control variables that must be held constant to prevent external factors from affecting the results. Experimental design involves not only the selection of suitable independent, dependent, and control variables, but also planning the delivery of the experiment under statistically optimal conditions given the constraints of available resources. There are multiple approaches for determining the set of design points (unique combinations of the settings of the independent variables) to be used in the experiment.
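One simple way to obtain a set of design points is to enumerate every combination of factor levels (a full factorial design). The sketch below illustrates this idea only; the factor names and levels are hypothetical, and other approaches to choosing design points exist.

```python
from itertools import product

# Hypothetical factors and levels, chosen only for illustration.
factors = {
    "temperature_C": [150, 180],
    "pressure_bar": [1, 2],
    "catalyst": ["A", "B", "C"],
}


def full_factorial(factors):
    """Return every unique combination of factor settings as a list of dicts."""
    names = list(factors)
    return [dict(zip(names, combo)) for combo in product(*factors.values())]


design_points = full_factorial(factors)
print(len(design_points))  # number of design points: 2 * 2 * 3
for point in design_points[:3]:
    print(point)
```

The number of design points grows multiplicatively with the number of levels per factor, which is one reason the choice of design is constrained by available resources.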

Main concerns in experimental design include the establishment of validity, reliability, and replicability. For example, these concerns can be partially addressed by carefully choosing the independent variable, reducing the risk of measurement error, and ensuring that the documentation of the method is sufficiently detailed. Related concerns include achieving appropriate levels of statistical power and sensitivity.
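As a rough illustration of what an "appropriate level of statistical power" implies for planning, the sketch below computes an approximate sample size per group for comparing two means. It assumes a two-sided test, equal group sizes, and a normal approximation; the function name and default values are illustrative, not taken from the article.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate units per group for a two-sided, two-sample comparison of means.

    effect_size is the standardized difference (difference in means divided by
    the common standard deviation). Uses the normal approximation
    n = 2 * ((z_{1 - alpha/2} + z_{power}) / effect_size) ** 2.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


for d in (0.2, 0.5, 0.8):
    print(d, sample_size_per_group(d))
```

Smaller effects require many more units for the same power, so the expected effect size is worth stating before data collection begins.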

Correctly designed experiments advance knowledge in the natural and social sciences and engineering. Other applications include marketing and policy making. The study of the design of experiments is an important topic in metascience.

History

Statistical experiments, following Charles S. Peirce

A theory of statistical inference was developed by Charles S. Peirce in "Illustrations of the Logic of Science" (1877–1878)[1] and "A Theory of Probable Inference" (1883),[2] two publications that emphasized the importance of randomization-based inference in statistics.[3]

Randomized experiments

Charles S. Peirce randomly assigned volunteers to a blinded, repeated-measures design to evaluate their ability to discriminate weights.[4] [5] [6] [7] Peirce's experiment inspired other researchers in psychology and education, which developed a research tradition of randomized experiments in laboratories and specialized textbooks in the 1800s.[4] [5] [6] [7]

Optimal designs for regression models

Charles S. Peirce also contributed the first English-language publication on an optimal design for regression models in 1876.[8] A pioneering optimal design for polynomial regression was suggested by Gergonne in 1815. In 1918, Kirstine Smith published optimal designs for polynomials of degree six (and less).[9] [10]

Sequences of experiments

The use of a sequence of experiments, where the design of each may depend on the results of previous experiments, including the possible decision to stop experimenting, is within the scope of sequential analysis, a field that was pioneered[11] by Abraham Wald in the context of sequential tests of statistical hypotheses.[12] Herman Chernoff wrote an overview of optimal sequential designs,[13] while adaptive designs have been surveyed by S. Zacks.[14] One specific type of sequential design is the "two-armed bandit", generalized to the multi-armed bandit, on which early work was done by Herbert Robbins in 1952.[15]

Fisher's principles

A methodology for designing experiments was proposed by Ronald Fisher, in his innovative books: The Arrangement of Field Experiments (1926) and The Design of Experiments (1935). Much of his pioneering work dealt with agricultural applications of statistical methods. As a mundane example, he described how to test the lady tasting tea hypothesis, that a certain lady could distinguish by flavour alone whether the milk or the tea was first placed in the cup. These methods have been broadly adapted in biological, psychological, and agricultural research.[16]
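The logic of the lady tasting tea test can be sketched numerically. The code below assumes the commonly described setup of eight cups, four prepared milk-first and four tea-first, with the taster asked to pick the four milk-first cups; these numbers are an assumption here, since the text above does not state them. Under pure guessing, the number of correct picks follows a hypergeometric distribution.

```python
from fractions import Fraction
from math import comb

CUPS = 8        # assumed total number of cups
MILK_FIRST = 4  # assumed number of cups prepared milk-first


def p_exactly(k):
    """Probability of exactly k correct milk-first picks when guessing at random."""
    ways = comb(MILK_FIRST, k) * comb(CUPS - MILK_FIRST, MILK_FIRST - k)
    return Fraction(ways, comb(CUPS, MILK_FIRST))


def p_at_least(k):
    """Probability of k or more correct picks when guessing at random."""
    return sum(p_exactly(j) for j in range(k, MILK_FIRST + 1))


print(p_at_least(4))  # chance of a perfect score by guessing
print(p_at_least(3))  # chance of at most one mistake by guessing
```

Only a perfect score is rare enough under guessing to fall below the conventional 5% threshold in this setup, which shows how the design itself fixes what evidence the experiment can provide.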

- Comparison

- In some fields of study it is not possible to have independent measurements to a traceable metrology standard. Comparisons between treatments are much more valuable and are usually preferable, and are often made against a scientific control or traditional treatment that acts as baseline.

- Randomization

- Random assignment is the process of assigning individuals at random to groups or to different groups in an experiment, so that each individual of the population has the same chance of becoming a participant in the study. The random assignment of individuals to groups (or conditions within a group) distinguishes a rigorous, "true" experiment from an observational study or "quasi-experiment".[17] There is an extensive body of mathematical theory that explores the consequences of making the allocation of units to treatments by means of some random mechanism (such as tables of random numbers, or the use of randomization devices such as playing cards or dice). Assigning units to treatments at random tends to mitigate confounding, which makes effects due to factors other than the treatment appear to result from the treatment.

- The risks associated with random allocation (such as having a serious imbalance in a key characteristic between a treatment group and a control group) are calculable and hence can be managed down to an acceptable level by using enough experimental units. However, if the population is divided into several subpopulations that somehow differ, and the research requires each subpopulation to be equal in size, stratified sampling can be used. In that way, the units in each subpopulation are randomized, but not the whole sample. The results of an experiment can be generalized reliably from the experimental units to a larger statistical population of units only if the experimental units are a random sample from the larger population; the probable error of such an extrapolation depends on the sample size, among other things.

- Statistical replication

- Measurements are usually subject to variation and measurement uncertainty; thus they are repeated and full experiments are replicated to help identify the sources of variation, to better estimate the true effects of treatments, to further strengthen the experiment's reliability and validity, and to add to the existing knowledge of the topic.[18] However, certain conditions must be met before the replication of the experiment is commenced: the original research question has been published in a peer-reviewed journal or widely cited, the researcher is independent of the original experiment, the researcher must first try to replicate the original findings using the original data, and the write-up should state that the study conducted is a replication study that tried to follow the original study as strictly as possible.[19]

- Blocking

- Blocking is the non-random arrangement of experimental units into groups (blocks) consisting of units that are similar to one another. Blocking reduces known but irrelevant sources of variation between units and thus allows greater precision in the estimation of the source of variation under study.

- Orthogonality

Example of orthogonal factorial design

- Orthogonality concerns the forms of comparison (contrasts) that can be legitimately and efficiently carried out. Contrasts can be represented by vectors, and sets of orthogonal contrasts are uncorrelated and independently distributed if the data are normal. Because of this independence, each orthogonal treatment provides different information to the others. If there are T treatments and T − 1 orthogonal contrasts, all the information that can be captured from the experiment is obtainable from the set of contrasts.

- Factorial experiments

- Use of factorial experiments instead of the one-factor-at-a-time method. These are efficient at evaluating the effects and possible interactions of several factors (independent variables). Analysis of experiment design is built on the foundation of the analysis of variance, a collection of models that partition the observed variance into components, according to what factors the experiment must estimate or test.
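The randomization and blocking principles above can be combined in a randomized block assignment: units are first grouped into blocks of similar units, and treatments are then assigned at random within each block. The following is a minimal sketch under stated assumptions; the function name, block names, and unit labels are hypothetical.

```python
import random


def randomized_block_assignment(blocks, treatments, seed=None):
    """Randomly assign treatments within each block, keeping each block balanced.

    blocks maps a block name to the list of units in that block.
    Each block size must be a multiple of the number of treatments.
    Returns a dict mapping unit -> (block name, treatment).
    """
    rng = random.Random(seed)  # a seed makes the allocation reproducible
    assignment = {}
    for block_name, units in blocks.items():
        if len(units) % len(treatments) != 0:
            raise ValueError(f"block {block_name!r} cannot be balanced")
        labels = list(treatments) * (len(units) // len(treatments))
        rng.shuffle(labels)  # randomization happens inside the block only
        for unit, label in zip(units, labels):
            assignment[unit] = (block_name, label)
    return assignment


# Hypothetical example: two fields (blocks) with four plots each.
blocks = {
    "field_north": ["n1", "n2", "n3", "n4"],
    "field_south": ["s1", "s2", "s3", "s4"],
}
plan = randomized_block_assignment(blocks, ["control", "treatment"], seed=42)
for unit, (block, treatment) in plan.items():
    print(unit, block, treatment)
```

Because every block receives each treatment equally often, differences between blocks cannot be mistaken for treatment effects, while the within-block shuffle preserves the protection that randomization gives against confounding.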

Example

This example of design experiments is attributed to Harold Hotelling, building on examples from Frank Yates.[20] [21] [13] The experiments designed in this example involve combinatorial designs.[22]

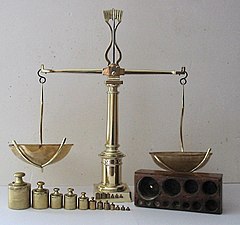

Weights of eight objects are measured using a pan balance and set of standard weights. Each weighing measures the weight difference between objects in the left pan and any objects in the right pan by adding calibrated weights to the lighter pan until the balance is in equilibrium. Each measurement has a random error. The average error is zero; the standard deviation of the probability distribution of the errors is the same number σ on different weighings; errors on different weighings are independent. Denote the true weights by $\theta_1, \dots, \theta_8$.

We consider two different experiments:

- Weigh each object in one pan, with the other pan empty. Let $X_i$ be the measured weight of the object, for i = 1, ..., 8.

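- Do the eight weighings according to the following schedule (a weighing matrix), and let $Y_i$ be the measured difference for i = 1, ..., 8:

| Weighing | Left pan | Right pan |
|---|---|---|
| 1st | 1 2 3 4 5 6 7 8 | (empty) |
| 2nd | 1 2 3 8 | 4 5 6 7 |
| 3rd | 1 4 5 8 | 2 3 6 7 |
| 4th | 1 6 7 8 | 2 3 4 5 |
| 5th | 2 4 6 8 | 1 3 5 7 |
| 6th | 2 5 7 8 | 1 3 4 6 |
| 7th | 3 4 7 8 | 1 2 5 6 |
| 8th | 3 5 6 8 | 1 2 4 7 |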

- Then the estimated value of the weight $\theta_1$ is

$$\widehat{\theta}_1 = \frac{Y_1 + Y_2 + Y_3 + Y_4 - Y_5 - Y_6 - Y_7 - Y_8}{8}.$$

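- Similar estimates can be found for the weights of the other items. For example

$$\begin{aligned}
\widehat{\theta}_2 &= \frac{Y_1 + Y_2 - Y_3 - Y_4 + Y_5 + Y_6 - Y_7 - Y_8}{8}, \\
\widehat{\theta}_3 &= \frac{Y_1 + Y_2 - Y_3 - Y_4 - Y_5 - Y_6 + Y_7 + Y_8}{8}, \\
\widehat{\theta}_4 &= \frac{Y_1 - Y_2 + Y_3 - Y_4 + Y_5 - Y_6 + Y_7 - Y_8}{8}, \\
\widehat{\theta}_5 &= \frac{Y_1 - Y_2 + Y_3 - Y_4 - Y_5 + Y_6 - Y_7 + Y_8}{8}, \\
\widehat{\theta}_6 &= \frac{Y_1 - Y_2 - Y_3 + Y_4 + Y_5 - Y_6 - Y_7 + Y_8}{8}, \\
\widehat{\theta}_7 &= \frac{Y_1 - Y_2 - Y_3 + Y_4 - Y_5 + Y_6 + Y_7 - Y_8}{8}, \\
\widehat{\theta}_8 &= \frac{Y_1 + Y_2 + Y_3 + Y_4 + Y_5 + Y_6 + Y_7 + Y_8}{8}.
\end{aligned}$$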

The question of design of experiments is: which experiment is better?

The variance of the estimate $X_1$ of $\theta_1$ is $\sigma^2$ if we use the first experiment. But if we use the second experiment, the variance of the estimate given above is $\sigma^2/8$. Thus the second experiment gives us 8 times as much precision for the estimate of a single item, and estimates all items simultaneously, with the same precision. What the second experiment achieves with eight weighings would require 64 weighings if the items are weighed separately. However, note that the estimates obtained in the second experiment all depend on the same eight measurements, so an error in a single weighing affects every estimate (although, because the sign patterns of the weighings are mutually orthogonal, the estimation errors of different items are uncorrelated).
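The variance comparison above can be checked with a short sketch. It encodes, for each object, in which pan it sits during each of the eight weighings. The sign patterns for objects 2 to 8 follow the estimators given in this article for those weights; the pattern for object 1 is an assumption (the remaining pattern orthogonal to the others), and the true weights used are hypothetical.

```python
# Sign pattern of each object across the eight weighings:
# '+' means the object sits in the left pan, '-' in the right pan.
PATTERNS = [
    "++++----",  # object 1 (assumed: the remaining orthogonal pattern)
    "++--++--",  # object 2
    "++----++",  # object 3
    "+-+-+-+-",  # object 4
    "+-+--+-+",  # object 5
    "+--++--+",  # object 6
    "+--+-++-",  # object 7
    "++++++++",  # object 8
]
# S[k][i] is the sign of object k+1 in weighing i+1.
S = [[1 if c == "+" else -1 for c in pattern] for pattern in PATTERNS]


def weigh(true_weights, errors):
    """Simulate Y_i = (left pan total) - (right pan total) + error_i."""
    return [
        sum(S[k][i] * true_weights[k] for k in range(8)) + errors[i]
        for i in range(8)
    ]


def estimate(y):
    """Estimate each weight as the signed sum of the eight readings divided by 8."""
    return [sum(S[k][i] * y[i] for i in range(8)) / 8 for k in range(8)]


# Orthogonality check: patterns of different objects have zero dot product.
gram = [[sum(a * b for a, b in zip(S[j], S[k])) for k in range(8)] for j in range(8)]

# Each estimate combines 8 independent errors with weights of +1/8 or -1/8,
# so its variance is 8 * sigma^2 / 64 = sigma^2 / 8.
variance_factor = sum((s / 8) ** 2 for s in S[0])  # multiplies sigma^2

true_weights = [10, 20, 30, 40, 50, 60, 70, 80]  # hypothetical values
recovered = estimate(weigh(true_weights, [0] * 8))  # noise-free sanity check
print(variance_factor, recovered)
```

The zero off-diagonal entries of `gram` are what allow all eight weights to be estimated from the same eight readings without the objects interfering with one another.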

Many problems of the design of experiments involve combinatorial designs, as in this example and others.[22]

Avoiding false positives

False positive conclusions, often resulting from the pressure to publish or the author's own confirmation bias, are an inherent hazard in many fields. A good way to prevent biases potentially leading to false positives in the data collection phase is to use a double-blind design. When a double-blind design is used, participants are randomly assigned to experimental groups but the researcher is unaware of which participants belong to which group. Therefore, the researcher cannot affect the participants' response to the intervention. Experimental designs with undisclosed degrees of freedom are a problem.[23] This can lead to conscious or unconscious "p-hacking": trying multiple things until you get the desired result. It typically involves the manipulation, perhaps unconsciously, of the process of statistical analysis and the degrees of freedom until they return a figure below the p<.05 level of statistical significance.[24] [25] So the design of the experiment should include a clear statement proposing the analyses to be undertaken. P-hacking can be prevented by preregistering research, in which researchers have to send their data analysis plan to the journal they wish to publish their paper in before they even start their data collection, so that the analysis cannot be adjusted after seeing the data (https://osf.io). Another way to prevent this is taking the double-blind design to the data-analysis phase, where the data are sent to a data analyst unrelated to the research who scrambles the data, so there is no way to know which group participants belong to before they are potentially removed as outliers.
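The effect of "trying multiple things" can be quantified under a simplifying assumption: if each attempted analysis is treated as an independent test at significance level α with no true effect present, the chance of at least one spurious significant result grows quickly with the number of attempts. The sketch below illustrates that calculation only; real analyses of the same data are usually correlated, so the numbers are indicative rather than exact.

```python
def familywise_error_rate(alpha, n_tests):
    """Chance of at least one false positive among n independent tests at level alpha."""
    return 1 - (1 - alpha) ** n_tests


def bonferroni_alpha(alpha, n_tests):
    """Per-test level that keeps the overall false positive rate at or below alpha."""
    return alpha / n_tests


for k in (1, 5, 20):
    print(k, round(familywise_error_rate(0.05, k), 3), bonferroni_alpha(0.05, k))
```

With twenty undisclosed attempts at the 5% level, a false positive becomes more likely than not, which is why stating the planned analyses in advance matters.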

Clear and complete documentation of the experimental methodology is also important in order to support replication of results.[26]

Discussion topics when setting up an experimental design

An experimental design or randomized clinical trial requires careful consideration of several factors before actually doing the experiment.[27] An experimental design is the laying out of a detailed experimental plan in advance of doing the experiment. Some of the following topics have already been discussed in the principles of experimental design section:

- How many factors does the design have, and are the levels of these factors fixed or random?

- Are control conditions needed, and what should they be?

- Manipulation checks: did the manipulation really work?

- What are the background variables?

- What is the sample size? How many units must be collected for the experiment to be generalisable and have enough power?

- What is the relevance of interactions between factors?

- What is the influence of delayed effects of substantive factors on outcomes?

- How do response shifts affect self-report measures?

- How feasible is repeated administration of the same measurement instruments to the same units at different occasions, with a post-test and follow-up tests?

- What about using a proxy pretest?

- Are there lurking variables?

- Should the client/patient, researcher or even the analyst of the data be blind to conditions?

- What is the feasibility of subsequent application of different conditions to the same units?

- How many of each control and noise factors should be taken into account?

The independent variable of a study often has many levels or different groups. In a true experiment, researchers can have an experimental group, which is where their intervention testing the hypothesis is implemented, and a control group, which has all the same elements as the experimental group, without the interventional element. Thus, when everything else except for one intervention is held constant, researchers can certify with some certainty that this one element is what caused the observed change. In some instances, having a control group is not ethical. This is sometimes solved using two different experimental groups. In some cases, independent variables cannot be manipulated, for example when testing the difference between two groups who have a different disease, or testing the difference between genders (obviously variables that would be hard or unethical to assign participants to). In these cases, a quasi-experimental design may be used.

Causal attributions

In the pure experimental design, the independent (predictor) variable is manipulated by the researcher; that is, every participant of the research is chosen randomly from the population, and each participant chosen is assigned randomly to conditions of the independent variable. Only when this is done is it possible to certify with high probability that the differences in the outcome variables are caused by the different conditions. Therefore, researchers should choose the experimental design over other design types whenever possible. However, the nature of the independent variable does not always allow for manipulation. In those cases, researchers must be careful not to certify causal attribution when their design doesn't allow for it. For example, in observational designs, participants are not assigned randomly to conditions, and so if there are differences found in outcome variables between conditions, it is likely that there is something other than the differences between the conditions that causes the differences in outcomes, that is, a third variable. The same goes for studies with correlational design (Adér & Mellenbergh, 2008).

Statistical control

It is best that a process be in reasonable statistical control prior to conducting designed experiments. When this is not possible, proper blocking, replication, and randomization allow for the careful conduct of designed experiments.[28] To control for nuisance variables, researchers institute control checks as additional measures. Investigators should ensure that uncontrolled influences (e.g., source credibility perception) do not skew the findings of the study. A manipulation check is one example of a control check. Manipulation checks allow investigators to isolate the chief variables to strengthen support that these variables are operating as planned.

One of the most important requirements of experimental research designs is the necessity of eliminating the effects of spurious, intervening, and antecedent variables. In the most basic model, cause (X) leads to effect (Y). But there could be a third variable (Z) that influences (Y), and X might not be the true cause at all. Z is said to be a spurious variable and must be controlled for. The same is true for intervening variables (a variable in between the supposed cause (X) and the effect (Y)), and anteceding variables (a variable prior to the supposed cause (X) that is the true cause). When a third variable is involved and has not been controlled for, the relation is said to be a zero order relationship. In most practical applications of experimental research designs there are several causes (X1, X2, X3). In most designs, only one of these causes is manipulated at a time.
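The role of a spurious variable Z can be illustrated with simulated data. In the sketch below, X has no effect on Y at all; both depend only on Z. The data-generating model, sample size, and seed are assumptions made for illustration, and "controlling for Z" is done in the simplest possible way, by subtracting Z's known contribution (in practice one would typically estimate it, for example by regression).

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


rng = random.Random(0)  # fixed seed so the run is reproducible
n = 5000
z = [rng.gauss(0, 1) for _ in range(n)]
x = [zi + rng.gauss(0, 1) for zi in z]  # X is driven by Z plus noise
y = [zi + rng.gauss(0, 1) for zi in z]  # Y is driven by Z plus noise, not by X

raw = pearson(x, y)  # zero order relationship: Z not controlled for

# Control for Z by removing its (here known) contribution from X and Y.
x_res = [xi - zi for xi, zi in zip(x, z)]
y_res = [yi - zi for yi, zi in zip(y, z)]
controlled = pearson(x_res, y_res)

print(round(raw, 2), round(controlled, 2))
```

The uncontrolled correlation is substantial even though X does not cause Y, while the correlation after removing Z is close to zero, which is the pattern the text describes for a spurious relationship.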

Experimental designs after Fisher

Some efficient designs for estimating several main effects were found independently and in near succession by Raj Chandra Bose and K. Kishen in 1940 at the Indian Statistical Institute, but remained little known until the Plackett–Burman designs were published in Biometrika in 1946. About the same time, C. R. Rao introduced the concepts of orthogonal arrays as experimental designs. This concept played a central role in the development of Taguchi methods by Genichi Taguchi, which took place during his visit to the Indian Statistical Institute in the early 1950s. His methods were successfully applied and adopted by Japanese and Indian industries and subsequently were also embraced by US industry, albeit with some reservations.

In 1950, Gertrude Mary Cox and William Gemmell Cochran published the book Experimental Designs, which became the major reference work on the design of experiments for statisticians for years afterwards.

Developments of the theory of linear models have encompassed and surpassed the cases that concerned early writers. Today, the theory rests on advanced topics in linear algebra, algebra and combinatorics.

As with other branches of statistics, experimental design is pursued using both frequentist and Bayesian approaches: in evaluating statistical procedures like experimental designs, frequentist statistics studies the sampling distribution while Bayesian statistics updates a probability distribution on the parameter space.

Some important contributors to the field of experimental designs are C. S. Peirce, R. A. Fisher, F. Yates, R. C. Bose, A. C. Atkinson, R. A. Bailey, D. R. Cox, G. E. P. Box, W. G. Cochran, W. T. Federer, V. V. Fedorov, A. S. Hedayat, J. Kiefer, O. Kempthorne, J. A. Nelder, Andrej Pázman, Friedrich Pukelsheim, D. Raghavarao, C. R. Rao, S. S. Shrikhande, J. N. Srivastava, William J. Studden, G. Taguchi and H. P. Wynn.[29]

The textbooks of D. Montgomery, R. Myers, and G. Box/W. Hunter/J.S. Hunter have reached generations of students and practitioners. [30] [31] [32] [33] [34]

Some discussion of experimental design in the context of system identification (model building for static or dynamic models) is given in [35] and [36].

Human participant constraints

Laws and ethical considerations preclude some carefully designed experiments with human subjects. Legal constraints are dependent on jurisdiction. Constraints may involve institutional review boards, informed consent and confidentiality affecting both clinical (medical) trials and behavioral and social science experiments.[37] In the field of toxicology, for example, experimentation is performed on laboratory animals with the goal of defining safe exposure limits for humans.[38] Balancing the constraints are views from the medical field.[39] Regarding the randomization of patients, "... if no one knows which therapy is better, there is no ethical imperative to use one therapy or another." (p 380) Regarding experimental design, "...it is clearly not ethical to place subjects at risk to collect data in a poorly designed study when this situation can be easily avoided...". (p 393)

See also

- Adversarial collaboration

- Bayesian experimental design

- Block design

- Box–Behnken design

- Central composite design

- Clinical trial

- Clinical study design

- Computer experiment

- Control variable

- Controlling for a variable

- Experimetrics (econometrics-related experiments)

- Factor analysis

- Fractional factorial design

- Glossary of experimental design

- Grey box model

- Industrial engineering

- Instrument effect

- Law of large numbers

- Manipulation checks

- Multifactor design of experiments software

- One-factor-at-a-time method

- Optimal design

- Plackett–Burman design

- Probabilistic design

- Protocol (natural sciences)

- Quasi-experimental design

- Randomized block design

- Randomized controlled trial

- Research design

- Robust parameter design

- Sample size determination

- Supersaturated design

- Royal Commission on Animal Magnetism

- Survey sampling

- System identification

- Taguchi methods

References

- Peirce, Charles Sanders (1887). "Illustrations of the Logic of Science". Open Court (10 June 2014). ISBN 0812698495.

- Peirce, Charles Sanders (1883). "A Theory of Probable Inference". In C. S. Peirce (Ed.), Studies in logic by members of the Johns Hopkins University (p. 126–181). Little, Brown and Co (1883)

- Stigler, Stephen M. (1978). "Mathematical statistics in the early States". Annals of Statistics. 6 (2): 239–65 [248]. doi:10.1214/aos/1176344123. JSTOR 2958876. MR 0483118.

Indeed, Pierce's work contains one of the earliest explicit endorsements of mathematical randomization as a basis for inference of which I am aware (Peirce, 1957, pages 216–219)

- Peirce, Charles Sanders; Jastrow, Joseph (1885). "On Small Differences in Sensation". Memoirs of the National Academy of Sciences. 3: 73–83.

- Hacking, Ian (September 1988). "Telepathy: Origins of Randomization in Experimental Design". Isis. 79 (3): 427–451. doi:10.1086/354775. JSTOR 234674. MR 1013489. S2CID 52201011.

- Stephen M. Stigler (November 1992). "A Historical View of Statistical Concepts in Psychology and Educational Research". American Journal of Education. 101 (1): 60–70. doi:10.1086/444032. JSTOR 1085417. S2CID 143685203.

- Trudy Dehue (December 1997). "Deception, Efficiency, and Random Groups: Psychology and the Gradual Origination of the Random Group Design". Isis. 88 (4): 653–673. doi:10.1086/383850. PMID 9519574. S2CID 23526321.

- Peirce, C. S. (1876). "Note on the Theory of the Economy of Research". Coast Survey Report: 197–201, actually published 1879, NOAA PDF Eprint.

Reprinted in Collected Papers 7, paragraphs 139–157, also in Writings 4, pp. 72–78, and in Peirce, C. S. (July–August 1967). "Note on the Theory of the Economy of Research". Operations Research. 15 (4): 643–648. doi:10.1287/opre.15.4.643. JSTOR 168276.

- Guttorp, P.; Lindgren, G. (2009). "Karl Pearson and the Scandinavian school of statistics". International Statistical Review. 77: 64. CiteSeerX 10.1.1.368.8328. doi:10.1111/j.1751-5823.2009.00069.x.

- Smith, Kirstine (1918). "On the standard deviations of adjusted and interpolated values of an observed polynomial function and its constants and the guidance they give towards a proper choice of the distribution of observations". Biometrika. 12 (1–2): 1–85. doi:10.1093/biomet/12.1-2.1.

- Johnson, N.L. (1961). "Sequential analysis: a survey." Journal of the Royal Statistical Society, Series A. Vol. 124 (3), 372–411. (pages 375–376)

- Wald, A. (1945) "Sequential Tests of Statistical Hypotheses", Annals of Mathematical Statistics, 16 (2), 117–186.

- Herman Chernoff, Sequential Analysis and Optimal Design, SIAM Monograph, 1972.

- Zacks, S. (1996) "Adaptive Designs for Parametric Models". In: Ghosh, S. and Rao, C. R., (Eds) (1996). "Design and Analysis of Experiments," Handbook of Statistics, Volume 13. North-Holland. ISBN 0-444-82061-2. (pages 151–180)

- Robbins, H. (1952). "Some Aspects of the Sequential Design of Experiments". Bulletin of the American Mathematical Society. 58 (5): 527–535. doi:10.1090/S0002-9904-1952-09620-8.

- Miller, Geoffrey (2000). The Mating Mind: how sexual choice shaped the evolution of human nature, London: Heineman, ISBN 0-434-00741-2 (also Doubleday, ISBN 0-385-49516-1) "To biologists, he was an architect of the 'modern synthesis' that used mathematical models to integrate Mendelian genetics with Darwin's selection theories. To psychologists, Fisher was the inventor of various statistical tests that are still supposed to be used whenever possible in psychology journals. To farmers, Fisher was the founder of experimental agricultural research, saving millions from starvation through rational crop breeding programs." p.54.

- Creswell, J.W. (2008), Educational research: Planning, conducting, and evaluating quantitative and qualitative research (3rd edition), Upper Saddle River, NJ: Prentice Hall. 2008, p. 300. ISBN 0-13-613550-1

- Dr. Hani (2009). "Replication study". Archived from the original on 2 June 2012. Retrieved 27 October 2011.

- Burman, Leonard E.; Robert W. Reed; James Alm (2010), "A call for replication studies", Public Finance Review, 38 (6): 787–793, doi:10.1177/1091142110385210, S2CID 27838472, retrieved 27 October 2011

- Hotelling, Harold (1944). "Some Improvements in Weighing and Other Experimental Techniques". Annals of Mathematical Statistics. 15 (3): 297–306. doi:10.1214/aoms/1177731236.

- Giri, Narayan C.; Das, M. N. (1979). Design and Analysis of Experiments. New York, N.Y: Wiley. pp. 350–359. ISBN 9780852269145.

- Jack Sifri (8 December 2014). "How to Use Design of Experiments to Create Robust Designs With High Yield". youtube.com. Retrieved 11 February 2015.

- Simmons, Joseph; Leif Nelson; Uri Simonsohn (November 2011). "False-Positive Psychology: Undisclosed Flexibility in Data Collection and Analysis Allows Presenting Anything as Significant". Psychological Science. 22 (11): 1359–1366. doi:10.1177/0956797611417632. ISSN 0956-7976. PMID 22006061.

- "Science, Trust And Psychology in Crisis". KPLU. 2 June 2014. Archived from the original on 14 July 2014. Retrieved 12 June 2014.

- "Why Statistically Significant Studies Can Be Insignificant". Pacific Standard. 4 June 2014. Retrieved 12 June 2014.

- Chris Chambers (10 June 2014). "Physics envy: Do 'hard' sciences hold the solution to the replication crisis in psychology?". theguardian.com. Retrieved 12 June 2014.

- Adèr, Mellenbergh & Hand (2008) "Advising on Research Methods: A consultant's companion"

- Bisgaard, S (2008) "Must a Process be in Statistical Control before Conducting Designed Experiments?", Quality Engineering, ASQ, 20 (2), pp 143–176

- Giri, Narayan C.; Das, M. N. (1979). Design and Analysis of Experiments. New York, N.Y: Wiley. pp. 53, 159, 264. ISBN 9780852269145.

- Montgomery, Douglas (2013). Design and analysis of experiments (8th ed.). Hoboken, NJ: John Wiley & Sons, Inc. ISBN 9781118146927.

- Walpole, Ronald E.; Myers, Raymond H.; Myers, Sharon L.; Ye, Keying (2007). Probability & statistics for engineers & scientists (8 ed.). Upper Saddle River, NJ: Pearson Prentice Hall. ISBN 978-0131877115.

- Myers, Raymond H.; Montgomery, Douglas C.; Vining, G. Geoffrey; Robinson, Timothy J. (2010). Generalized linear models : with applications in engineering and the sciences (2 ed.). Hoboken, N.J.: Wiley. ISBN 978-0470454633.

- Box, George E.P.; Hunter, William G.; Hunter, J. Stuart (1978). Statistics for Experimenters : An Introduction to Design, Data Analysis, and Model Building. New York: Wiley. ISBN 978-0-471-09315-2.

- Box, George E.P.; Hunter, William G.; Hunter, J. Stuart (2005). Statistics for Experimenters : Design, Innovation, and Discovery (2 ed.). Hoboken, N.J.: Wiley. ISBN 978-0471718130.

- Spall, J. C. (2010). "Factorial Design for Efficient Experimentation: Generating Informative Data for System Identification". IEEE Control Systems Magazine. 30 (5): 38–53. doi:10.1109/MCS.2010.937677. S2CID 45813198.

- Pronzato, L (2008). "Optimal experimental design and some related control problems". Automatica. 44 (2): 303–325. arXiv:0802.4381. doi:10.1016/j.automatica.2007.05.016. S2CID 1268930.

- Moore, David S.; Notz, William I. (2006). Statistics : concepts and controversies (6th ed.). New York: W.H. Freeman. pp. Chapter 7: Data ethics. ISBN 9780716786368.

- Ottoboni, M. Alice (1991). The dose makes the poison : a plain-language guide to toxicology (2nd ed.). New York, N.Y: Van Nostrand Reinhold. ISBN 978-0442006600.

- Glantz, Stanton A. (1992). Primer of biostatistics (3rd ed.). ISBN 978-0-07-023511-3.

Sources

- Peirce, C. S. (1877–1878), "Illustrations of the Logic of Science" (series), Popular Science Monthly, vols. 12–13. Relevant individual papers:

- (1878 March), "The Doctrine of Chances", Popular Science Monthly, v. 12, March issue, pp. 604–615. Internet Archive Eprint.

- (1878 April), "The Probability of Induction", Popular Science Monthly, v. 12, pp. 705–718. Internet Archive Eprint.

- (1878 June), "The Order of Nature", Popular Science Monthly, v. 13, pp. 203–217. Internet Archive Eprint.

- (1878 August), "Deduction, Induction, and Hypothesis", Popular Science Monthly, v. 13, pp. 470–482. Internet Archive Eprint.

- (1883), "A Theory of Probable Inference", Studies in Logic, pp. 126–181, Little, Brown, and Company. (Reprinted 1983, John Benjamins Publishing Company, ISBN 90-272-3271-7)

External links

- A chapter from a "NIST/SEMATECH Handbook on Engineering Statistics" at NIST

- Box–Behnken designs from a "NIST/SEMATECH Handbook on Engineering Statistics" at NIST

- Detailed mathematical developments of most common DoE in the Opera Magistris v3.6 online reference Chapter 15, section 7.4, ISBN 978-2-8399-0932-7.


Source: https://en.wikipedia.org/wiki/Design_of_experiments

